Google File System

UCL Course COMP0133: Distributed Systems and Security LEC-09

Motivation

Webs need to be crawled and stored in “one huge disk” (aggregate storage capacity)

Searches from users need to be processed by “one powerful CPU” sSpread search processing across many CPUs)

Common PCs are cheaper than Custom parallel supercomputer

such that Google File System is designed for sharing data on clusters (hundreds of common PCs)

Failures

-

Software Problems (e.g., application bugs, operating systems bugs, etc.)

-

Hardware Problems (e.g., failures in disk, memory, network, power supply, connector, etc.)

-

Human Problems

Design Criteria

-

Detect & Tolerate & Recover from failures automatically

-

Large files (more than 100 MB) —– different from local UNIX file system

-

Large & Streaming Reads —– different from small random reads

Usually more than 1 MB & Applications read through contiguous regions in the file

-

Large & Sequential Writes (Appends) —– different from small random writes

Usually more than 1 MB & Files are seldom modified again once written

-

Concurrent appends by multiple clients (e.g., producer-consumer queues)

such that GFS wants atomicity for appends without synchronization among clients

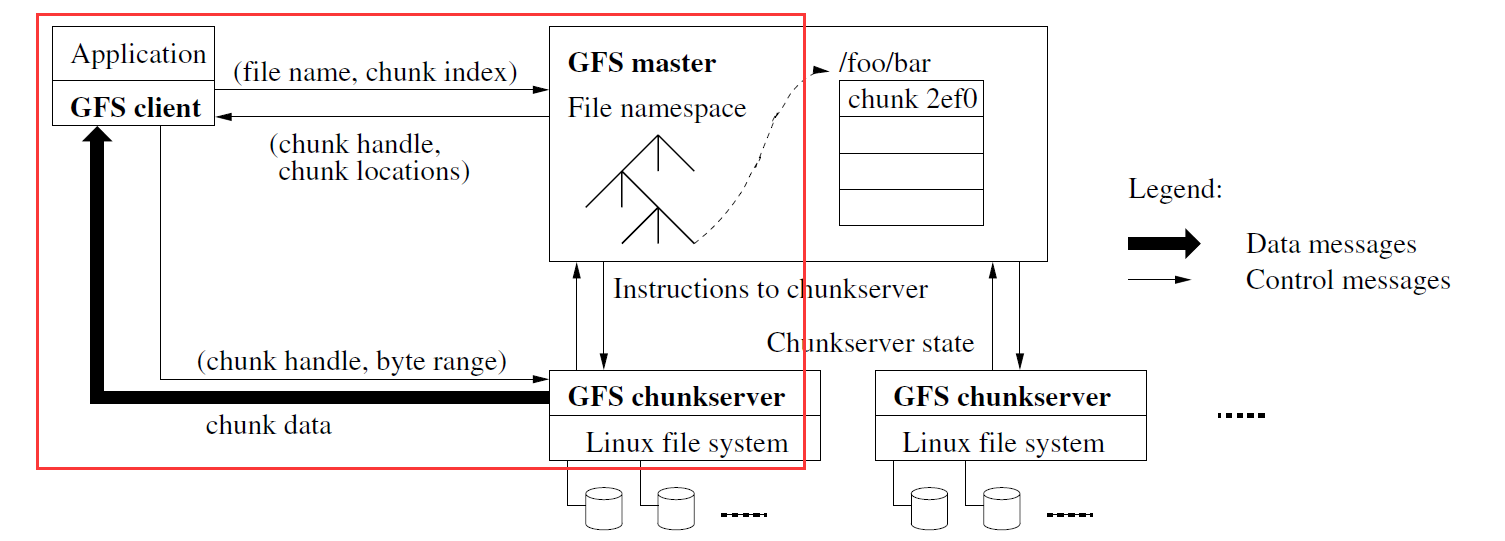

Architecture

-

One Master server (with backups hold replicated state)

-

Many Chunk servers

-

spread across racks

-

intra-rack (inside one rack) bandwidth is greater than inter-rack (between racks) bandwidth

-

Chunk: 64 MB portion of file (identified by 64-bit and globally unique ID chunk handle)

-

Components

Master Server

All metadata stored in RAM for performance

-

The file and chunk namespaces (with access control information per file)

-

The mapping from files to chunks

-

The current locations of each chunk replicas

Log

-

Master server logs all client requests that modify metadata to disk sequentially

-

Master server replicates log entries to remote backup servers

-

Master server replies to client only after log entries safe on disk on self and backups

Chunk Lease Management for chunk servers

-

If no outstanding lease when client requests write, master grants new one

-

Chunks have version numbers

-

Stored on disk at master and chunkservers

-

Each time master grants new lease, increments version, informs all replicas

-

-

Master can revoke leases early (e.g., when client requests rename or snapshot of file)

Garbage Collection for orphaned chunks (chunk offline when a delete operation happens)

Chunk Migration between chunk servers

Chunk Servers

Store 64 MB file chunks on local disk using standard Linux filesystem, each with version number and checksum

Read/Write requests specify chunk handle and byte range

Chunks replicated on configurable number of chunkservers (default: 3)

No caching of file data since too large for standard Linux buffer cache

Client

-

Issue Control Messages to Master server

and Issue Data Messages to chunk servers

-

Cache metadata (reduce load on single master)

but NOT cache data (no consistency difficulties among clients & not help for streaming reads or sequential writes)

-

Client API

Not UNIX semantics (not using inode)

-

open, delete, read, write

-

snapshot: creates a copy of a file quickly (old versions still accessible in the future)

-

Record Append: at least once and possibly with gaps

and/or inconsistencies among clients but keep correctness

-

Read Operation

Process

-

Client sends

{ filename, chunk index (offset) }to Master (if not cached) -

Master finds chunk handle for the offset, and replies with

{ chunk handle, chunk locations }

(only those with latest version) -

Client caches

{ chunk handle, chunk locations } -

Client sends request to the closest Chunk server with

{ chunk handle, byte range }“closest” determined by the IP addresses on simple rack-based network topology

-

Chunk server replies the chunk data

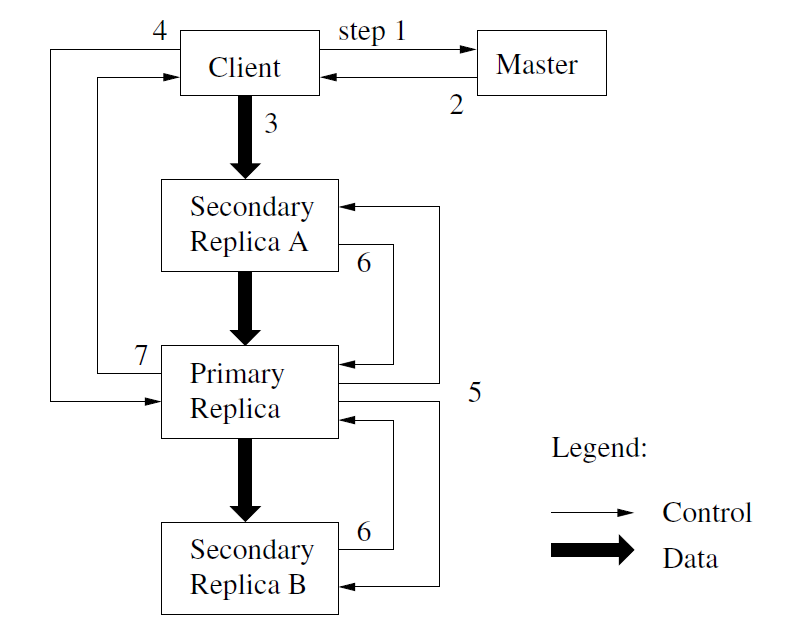

Write Operation

Chunk Lease

Some Chunk server is Primary Replica for each chunk

where the chunk lease (timeout: 60s) is granted by Master server

through periodic heartbeat messages between Master server and all Chunk servers

Process

-

Client asks Master about the last chunk of the file

-

Master tells Client the Primary Replica and Secondary Replicas

-

Client sends data to Primary Replica and Secondary Replicas in daisy chain,

and waits for acknowledgement from all replicasDaisy Chain + Pipelined

Client sends data to closest replica (could Primary or Secondary),

the replica forwards to another replica, continue until all receivewhich takes advantage of full-duplex Ethernet links

-

Client sends write request to Primary Replica

-

After Primary Replica finishes write operation

Primary Replica assigns serial number to the received write request and provides ordering (like Bayou)

Primary Replica forwards write request with same serial number to Secondary Replicas

-

Primary Replica waits for all Secondary Replicas to reply or timeout

Secondary Replica should reply only after they complete write or they can reply “error”

-

Primary Replica replies to Client (ok when writes in all replicas succeed; otherwise, client retry from step 3 to 7)

Record Append Operation

The control flow is similar to Write Operation

The differences are that

-

Client sends data to replicas of last chunk of file (because of append operation)

-

Client sends append request to primary

Cases

When append request fits in current last chunk (enough space to append):

-

Primary Replica appends data to own replica

-

Primary Replica tells Secondary Replicas to do same at same byte offset in theirs

-

Primary Replica replies with success to client

It is common case since the size of chunk (64 MB) is designed large enough based on analysis of workload

When append request NOT fit in current last chunk (NOT enough space to append):

-

Primary Replica fills current chunk with padding

-

Primary Replica instructs other replicas to do same

-

Primary Replica replies to client with “retry on next chunk”

such that client will retry operation if record append fails at any replica

However, this makes that replicas of same chunk may contain different data

(some succeed & some fail) —– Inconsistent

GFS guarantees that data have been appended at least once in atomic unit

when Primary Replica replies success

Record Append Semantics

Applications should include checksums in records they write using Record Append

- Reader can identify padding / record fragments using checksums

If application cannot tolerate duplicated records, should include unique ID in record

- Reader can use unique IDs to filter duplicates

Consistency Model

Namespace

Changes to namespace (i.e., metadata) are atomic

-

Easily done by single Master server

-

The Master server uses log to define global total order of namespace-changing operations (even after reboot)

Data

Define Consistent and Defined

-

Consistent: File region all clients see as same (same bits) regardless of replicas they read from

-

Defined: File region that is consistent, and all clients see what the mutation is in its entirety

Then,

-

When write or append operation fails, the file region will be inconsistent (some succeed & some fail)

-

When a serial write succeeds, the file region will be defined with same bits seen by clients

due to no concurrency & atomic write

-

When concurrent writes succeed, the file region will be consistent but undefined

due to Primary Replica chooses order of write operations might be unexpected across network (Not Recommended)

-

When a serial append succeeds or concurrent appends succeed,

the file region will be defined interspersed with inconsistent

due to append at least once in atomic (some succeed & some fail)

╔══════════════════════╦══════════════════════════╦═══════════════════╗

║ ║ Write ║ Append ║

╠══════════════════════╬══════════════════════════╬═══════════════════╣

║ serial success ║ defined ║ defined ║

╠══════════════════════╬══════════════════════════╣ interspersed with ║

║ concurrent successes ║ consistent but undefined ║ inconsistent ║

╠══════════════════════╬══════════════════════════╩═══════════════════╣

║ failure ║ inconsistent ║

╚══════════════════════╩══════════════════════════════════════════════╝

Delete Operation

When client deletes file

-

Master server records deletion in its log

-

File renamed to hidden name including deletion timestamp

Master server scans file namespace in background

-

Master server removes files with such names if deleted for longer than 3 days (configurable)

-

Master server erases in-memory metadata erased

Master server scans chunk namespace in background

- Master server removes unreferenced chunks from chunkservers

Master Reboot

-

Replays log from disk

-

Recovers namespace (directory) information

-

Recovers file-to-chunk-ID mapping

-

-

Asks chunkservers which chunks they hold

- Recovers chunk-ID-to-chunkserver mapping

-

If chunk server has older chunk, it’s stale

- Chunk server down at lease renewal

-

If chunk server has newer chunk, adopt its version number

- Master may have failed while granting lease

Chunk Server Failure

-

Master server notices missing heartbeats message

-

Master server decrements count of replicas for all chunks on dead chunk server

-

Master server vre-replicates chunks missing replicas in background

- Highest priority for chunks missing greatest number of replicas

Small Files

If storing a short executable in GFS, executing on many clients simultaneously

-

3 chunkservers storing executable overwhelmed by many clients’ concurrent requests

-

App-specific fix: replicate such files on more chunkservers; stagger app start time

Performance

Performance in GFS is explainable in Section 6

Summary

Success: used actively by Google to support search service and other applications

-

Availability and recoverability on cheap hardware

-

High throughput by decoupling control and data

-

Supports massive data sets and concurrent appends

Semantics not transparent to apps

- Must verify file contents to avoid inconsistent regions, repeated appends (at-least-once semantics)

Performance not good for all apps

- Assumes Large & Streaming Reads, Large & Sequential Writes (Appends) workload (no client caching!)