Distributed Shared Memory: Ivy

UCL Course COMP0133: Distributed Systems and Security LEC-05

Distributed Shared Memory

Problem, Goal, and Correctness

Problem

An application has a shared address space such that all memory locations accessible to all instructions

Divide code for application into pieces, and assign one piece to each of several computers on a LAN

However,

Each computer has own separate physical memory

Each piece of code may want to read or write any part of data

Where the data should be put? (which peice of data on which physical memory)

Goal

Shared contents divided across nodes (put memory of all nodes into one shared memory)

but programmer need not explicitly communicate among nodes (not need to know the location of data)

Correctness

Reason: Programmers want to be able to predict how CPU executes program (to write correct program)

Uniprocessor Correctness

Evaluate the correctness for each instruction separately

Each instruction takes machine from one state to another

e.g., LD (load) should return value of most recent ST (store) to same memory address

Correctness:

Execution gives same result as if one instruction is run at a time,

waiting for each to complete before next instruction

The problem is that modern CPUs do not execute instructions one-at-a-time in program order

-

Multiple instruction issue

-

Out-of-order instruction issue

However, modern CPUs must follow the uniprocessor correctness

Naïve Shared Memory

-

Each host with one CPU and connected by Internet

-

Each host has local copy of all memory

-

Reads local

-

Writes sent to other hosts (and execution continues immediately)

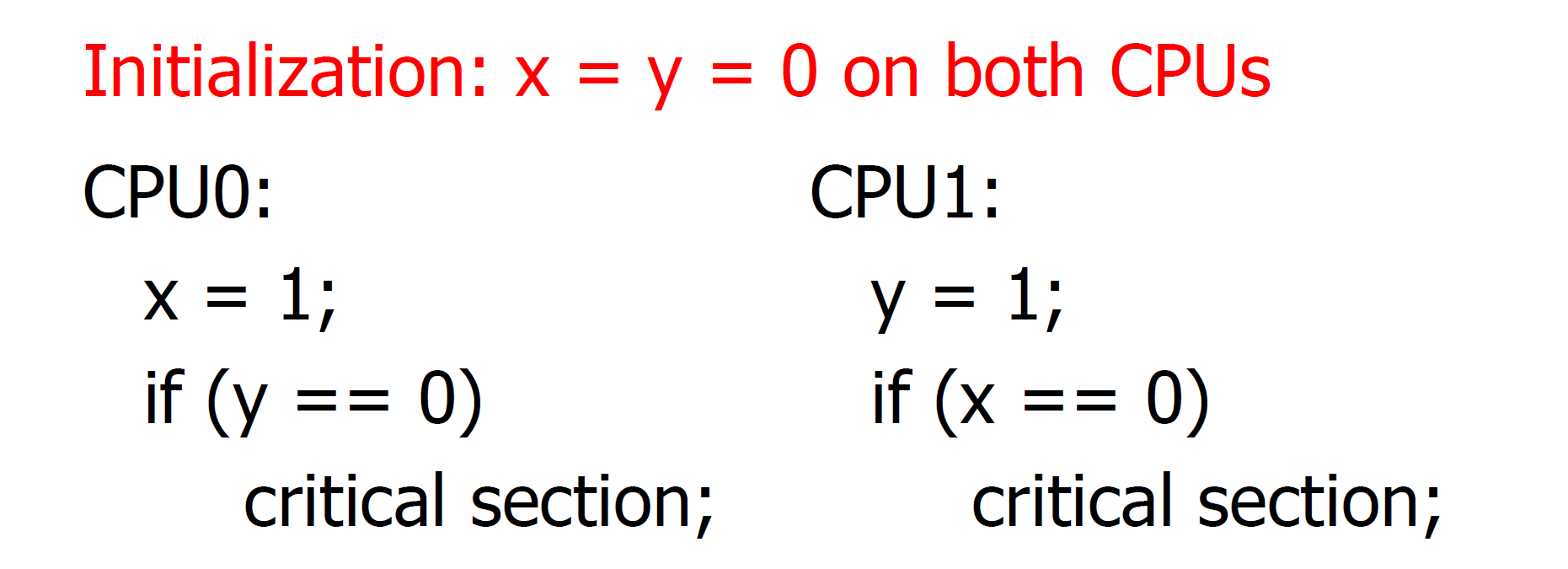

Mutual Exclusion Scenario

Critical Section: the section cannot be executed by more than one process/thread at a time

Correctness in Uniprocessor with two threads:

The scheduler runs each thread in the order of program and the memory is shared

Problem A in Naïve Shared Memory

Because remote writes are much slower than local reads

-

CPU0 sends write

x = 1, reads localy == 0 -

CPU1 reads local

x == 0before write sent by CPU0 arrives

The read/write order is wrong such that both CPU0 and CPU1 enter critical section

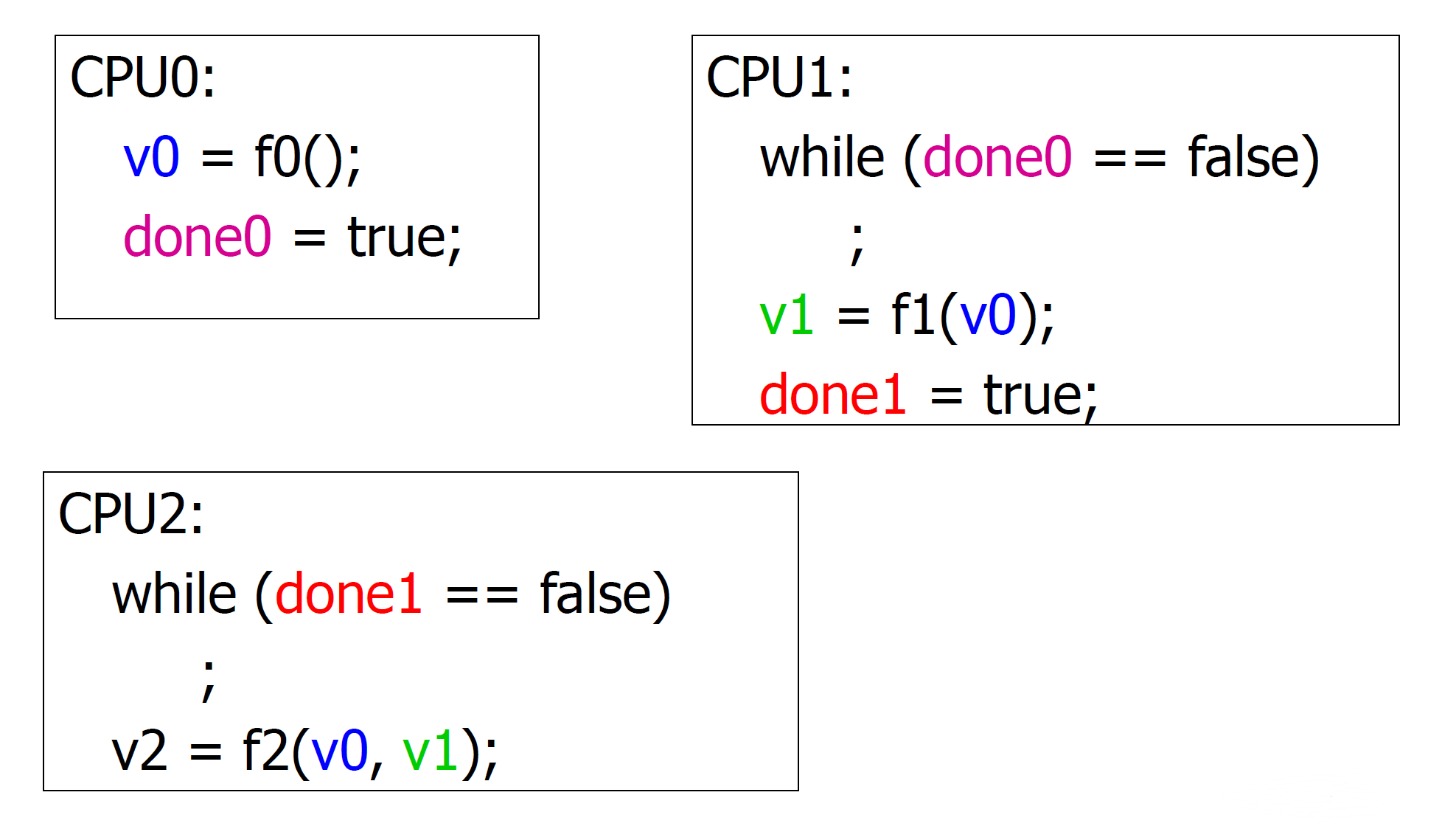

Data Dependencies Scenario

CPU1 depends on the done0 from CPU0,

CPU2 depends on the done1 from CPU1 (and done0 from CPU0)

Problem B in Naïve Shared Memory

CPU0 has two writes: v0 = f0() and done0 = true, but they might be reordered by network

such that the done0 = true but v0 does not hold the correct value

Problem C in Naïve Shared Memory

Even if each CPU sees writes from each other CPU in correct order

but CPU2 can see the writes from CPU1 before writes from CPU0

Correctness of Naïve Shared Memory

NOT CORRECT

Distributed Shared Memory

Consistency Model

Rules that distributed system will follow

However, these models are defined by human (have trade-offs: hard/easy, performance/semantics)

None should be perfect (elegant for the particular application)

Parallel Sorting Program

-

Load entire array into shared memory

-

Each host processes one piece of array

-

For the host

ia. sort own piece of array

b. set

done[i] = truec. wait for all

done[]to be trued. merge own piece of array with neighours

Fixed Apporach to Divide Shared Memory (Partition Address Space)

e.g., 1st MB on host0, 2nd MB on host1, 3rd MB on host2, and so on

Simply send all reads and writes to “owner” of address through network

Each CPU read- and write-protects pages in address ranges held by other CPUs

Detect reads and writes to remote pages with virtual machine hardware

Problem: cannot always predict which hosts will use which pages with complex memory allocation

Dynamic Apporach to Divide Shared Memory (Partition Address Space)

Single-Copy

Move the page to the reading/writing CPU each time it is used

CPU trying to read or write must find the current owner (the current location of page) then take page from it

Drawback:

reduce the performance since many CPUs read the same page

will not cause inconsistency (usually more read than write)

Multi-Copy

Move page for writes, but allow read-only copies

When CPU reads page that is not in its own local memory, find other CPU that most recently wrote to page

Works if pages are read-only and shared or read-write by one host

Problem: write sharing (false sharing)

Ivy using Centralized Manager

Overview

Each CPU has a ptable table with three attributes

-

lock: T (true), F (false)

-

access: R (read), W (write), nil (none)

-

owner: T (true), F(false)

Centralized Manager has a info table with three attributes

-

lock: T (true), F (false)

-

copy_set: list of CPUs with read-only copies

-

owner: CPU that can write the corresponding page

Messages Types

-

RQ (read query, reader to MGR)

-

RF (read forward, MGR to owner)

-

RD (read data, owner to reader)

-

RC (read confirm, reader to MGR)

-

WQ (write query, writer to MGR)

-

IV (invalidate, MGR to copy_set)

-

IC (invalidate confirm, copy_set to MGR)

-

WF (write forward, MGR to owner)

-

WD (write data, owner to writer)

-

WC (write confirm, writer to MGR)

Initialization

-

CPU0 owns (can write) the first page

-

CPU2 plays Centralized Manager role

╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗

║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║

╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣

║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║

╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣

║ F ║ W ║ T ║ F ║ nil ║ F ║ F ║ nil ║ F ║ F ║ {} ║ CPU0 ║

╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣

║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║

╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝

Read Example

CPU1 wants to read the first page owned by CPU0

-

CPU1 (reader) lock the first page in ptable, and then send RQ to Centralized Manager

╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ W ║ T ║ T ║ nil ║ F ║ F ║ nil ║ F ║ F ║ {} ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

Centralized Manager lock the first page and put CPU1 (reader) into copy_set in info, and then send RF to CPU0 (owner)

╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ W ║ T ║ T ║ nil ║ F ║ F ║ nil ║ F ║ T ║ { CPU1 } ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

CPU0 (owner) lock the first page and change access to R in ptable, and then send RD to CPU1 (reader)

╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ T ║ R ║ T ║ T ║ nil ║ F ║ F ║ nil ║ F ║ T ║ { CPU1 } ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

After sending RD, CPU0 (owner) unlock the first page in ptable

╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ R ║ T ║ T ║ nil ║ F ║ F ║ nil ║ F ║ T ║ { CPU1 } ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

After receiving RD, CPU1 (reader) send RC to Centralized Manager

-

After sending RC, CPU1 (reader) change access to R and unlock the first page in ptable

╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ R ║ T ║ F ║ R ║ F ║ F ║ nil ║ F ║ T ║ { CPU1 } ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

After receiving RC, Centralized Manager unlock the first page in info

╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ R ║ T ║ F ║ R ║ F ║ F ║ nil ║ F ║ F ║ { CPU1 } ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝

Write Example

CPU2 wants to write the first page owned by CPU0

-

CPU2 (writer) lock the first page in ptable,

and then send WQ to Centralized Manager╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ R ║ T ║ F ║ R ║ F ║ T ║ nil ║ F ║ F ║ { CPU1 } ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

Centralized Manager lock the first page in info,

and then send IV to all CPUs in copy_set╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ R ║ T ║ F ║ R ║ F ║ T ║ nil ║ F ║ T ║ { CPU1 } ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

All CPUs in copy_set lock the first page and change access to nil in ptable,

and then reply IC to Centralized Manager╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ R ║ T ║ T ║ nil ║ F ║ T ║ nil ║ F ║ T ║ { CPU1 } ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

After replying IC, all CPUs in copy_set unlock the first page in ptable

╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ R ║ T ║ F ║ nil ║ F ║ T ║ nil ║ F ║ T ║ { CPU1 } ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

After receiving IC, Centralized Manager clear the copy_set in info,

and then send WF to CPU0 (owner)╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ R ║ T ║ F ║ nil ║ F ║ T ║ nil ║ F ║ T ║ {} ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

After receiving WF, CPU0 lock the first page, change access to nil, change owner to F in ptable

and then reply WD to CPU2 (writer)╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ T ║ nil ║ F ║ F ║ nil ║ F ║ T ║ nil ║ F ║ T ║ {} ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

After sending WD, CPU0 unlock the first page in ptable

╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ F ║ nil ║ F ║ F ║ nil ║ F ║ T ║ nil ║ F ║ T ║ {} ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

After receiving WD, CPU2 (writer) change access to W, change owner to T in ptable

and then send WC to Centralized Manager╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ T ║ nil ║ F ║ F ║ nil ║ F ║ T ║ W ║ T ║ T ║ {} ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

After sending WC, CPU2 (writer & owner) unlock the first page in ptable

╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ T ║ nil ║ F ║ F ║ nil ║ F ║ F ║ W ║ T ║ T ║ {} ║ CPU0 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝ -

After receiving WC, Centralized Manager set the owner of the first page to CPU2 (writer & owner),

and then unlock the first page in info╔═══════════════════════╦═══════════════════════╦═══════════════════════╦═════════════════════════╗ ║ CPU0 - ptable ║ CPU1 - ptable ║ CPU2 - ptable ║ CPU2 - info ║ ╠══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦════════╦═══════╬══════╦══════════╦═══════╣ ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ access ║ owner ║ lock ║ copy_set ║ owner ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ T ║ nil ║ F ║ F ║ nil ║ F ║ F ║ W ║ T ║ F ║ {} ║ CPU2 ║ ╠══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬════════╬═══════╬══════╬══════════╬═══════╣ ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ... ║ ╚══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩════════╩═══════╩══════╩══════════╩═══════╝

Requirements of Write Operations

Invariants for tables

-

Centralized Manager must agree with CPUs about single owner of one page

-

Centralized Manager must agree with CPUs about copy_set who have read-only copies

-

Non-empty copy_set must agree with read-only for owner

and locking and unlocking protect atomicity of Write Operations

Sequential Consistency

Definition

-

All CPUs see results consistent with that total order

-

Each CPU’s instructions appear in order in total order

Requirements

-

Each CPU must execute reads and writes in program order, one at a time

-

Each memory location must execute reads and writes in arrival order, one at a time

Ivy Case Study

-

Meet the first requirement since

Each CPU must execute reads and writes in program order, one at a time

-

Meet the second requirement since

Each page should be read from the latest writer (owner in info) if not copied

Each page copies should be cleaned by all CPUs in the copy_set, and then the original will be written

Each memory location must execute reads and writes in arrival order, one at a time

Therefore, Ivy obeys sequential consistency (has correctness)

Performance

If x-axis is number of CPUs used, and y-axis is how many times faster the program run with such CPUs

Ideal Performance: Linear

Experiments include performance of PDE, matrix multiplication, (linear)

- Works can be done without data dependencies

and “block odd-even based merge-split algorithm” (worse than linear, flatten significantly beyond 2 CPUs)

-

Using parallel sorting algorithm

-

But sorting alogrithm is not good at parallelization (nearly sequencialization) because of waiting communication between CPUs

such that parallel sorting on a loosely-coupled multiprocessor is very difficult

Block Odd-Even based Merge-Split Algorithm

-

Partition data to be sorted over N CPUs, held in one shared array

-

Sort data in each CPU locally

-

View CPUs as in a line, number 0 to N-1

-

Repeat N times

-

Even CPUs send to (higher) odd CPUs

-

Odd CPUs merge, send lower half back to even CPUs

-

Odd CPUs send to (higher) even CPUs

-

Even CPUs merge, send lower half back to odd CPUs

-

-

“Send” means “Receiver reads from right place in shared memory”

Comparison: Ivy vs. RPC

Advantages in Ivy

-

More transparent (access the shared memory as in one box)

-

Easier to program (interaction is hidden)

Reasons for Multi-CPU PCs use Ivy-like protocols for cache coherence between CPUs

Advantages in RPC

-

Isolation

-

Control over communication

-

Latency-tolerance

-

Portability

Reasons for model for programming workstation cluster, especially communication dictates performance