Transparency and RPC

UCL Course COMP0133: Distributed Systems and Security LEC-04

Transparency in Distributed Systems

Retain the “feel” of writing code for “one box” even when that code runs distributed across machines

since programmers learn to write code for a single box when they are beginners

Goal

- Preserve original and unmodified client code
- Preserve original and unmodified server code
- Glue together client and server without changing the behaviour of either
- No need to think about the network

Local Procedure Call

- Caller function pushes arguments onto stack
- Program Counter (PC) jumps to address of callee function
- Callee function reads arguments from stack
- Callee function executes and puts return value in register
- Callee function returns to next instruction in caller function

Remote Procedure Call

Goal

Make distributed programming “feel” no different from making a local procedure call

Abstraction

Servers export their local APIs so that they are accessible over the network

A procedure call on the client side generates a request over the network to the server

The called procedure executes on the server side and returns its result over the network to the client

Implementation Problems

- Different sizes for the same data type (32-bit vs. 64-bit)
- Different endianness of machines (little-endian vs. big-endian)

Requirement: a mechanism to pass procedure parameters and return values in a machine-independent fashion

Solution: Interface Description Language (IDL)

Compiling the interface description file produces

- Type definitions in the native language used on each machine
- Code to marshal native data types into machine-neutral byte streams for the network, and to unmarshal machine-neutral byte streams back into native data types for the local environment
- Stub routines on the client side that forward local procedure calls as requests to the server

e.g., the IDL for Sun RPC is XDR (eXternal Data Representation)
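To make the marshaling step concrete, here is a minimal sketch in C of how generated code could turn two integers into a machine-neutral byte stream and back. It assumes each integer travels as 4 bytes with the most significant byte first, which is the layout XDR uses for its 32-bit integers. The struct `pair_args` and the functions `put_u32`, `get_u32`, `marshal_pair_args`, and `unmarshal_pair_args` are hypothetical names for illustration, not part of any RPC library.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical argument record; fixed-width types avoid the 32/64-bit size problem. */
struct pair_args {
    int32_t in1;
    int32_t in2;
};

/* Write v as 4 bytes, most significant byte first, whatever the host endianness. */
static void put_u32(uint8_t *buf, uint32_t v)
{
    buf[0] = (uint8_t)(v >> 24);
    buf[1] = (uint8_t)(v >> 16);
    buf[2] = (uint8_t)(v >> 8);
    buf[3] = (uint8_t)v;
}

/* Read 4 bytes, most significant byte first, into a host integer. */
static uint32_t get_u32(const uint8_t *buf)
{
    return ((uint32_t)buf[0] << 24) | ((uint32_t)buf[1] << 16) |
           ((uint32_t)buf[2] << 8)  |  (uint32_t)buf[3];
}

/* Marshal: native struct -> 8-byte wire format. Returns the number of bytes written. */
size_t marshal_pair_args(const struct pair_args *a, uint8_t out[8])
{
    put_u32(out, (uint32_t)a->in1);
    put_u32(out + 4, (uint32_t)a->in2);
    return 8;
}

/* Unmarshal: 8-byte wire format -> native struct. */
void unmarshal_pair_args(const uint8_t in[8], struct pair_args *a)
{
    a->in1 = (int32_t)get_u32(in);
    a->in2 = (int32_t)get_u32(in + 4);
}
```

Because the code shifts and masks values instead of copying raw memory, the bytes on the wire are the same on little-endian and big-endian hosts.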

Example: XDR for Sun RPC

proto.x (an XDR file) defines the API for procedure calls between client and server

e.g.,

```
// addtwo.x
struct add_args {
    int in1;
    int in2;
};

program ADD_PROG {                 // program number
    version ADD_VERS {             // version number
        int ADD(add_args) = 1;     // procedure number
    } = 1;
} = 400001;
```

Note

- The `int` type is defined by the IDL (here, XDR), not by C
- Only one argument is allowed (use a `struct` to combine several arguments)
- The program number distinguishes different RPC protocols (unique)
- The version number distinguishes versions of one RPC protocol (so that old and new versions can both be supported)
- The procedure number identifies the supplied procedure (unique)
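The role of the three numbers can be sketched as a server-side dispatch routine. This is a simplified illustration, not the code that the Sun RPC tools generate: the `call_header` struct, the `ST_*` status codes, and the `dispatch` function are hypothetical, and the arguments are passed already unmarshaled to keep the sketch short. Only the numeric values come from addtwo.x above.

```c
#include <stdint.h>

/* Numbers taken from addtwo.x. */
#define ADD_PROG 400001u
#define ADD_VERS 1u
#define ADD_PROC 1u

/* Hypothetical status codes for this sketch. */
enum dispatch_status { ST_OK, ST_NO_PROGRAM, ST_BAD_VERSION, ST_NO_PROCEDURE };

/* Hypothetical, simplified request header naming the procedure to call. */
struct call_header {
    uint32_t prog;
    uint32_t vers;
    uint32_t proc;
};

static int32_t add_impl(int32_t in1, int32_t in2)
{
    return in1 + in2;
}

/* Route a request to the right procedure, or report why it cannot be served. */
enum dispatch_status dispatch(const struct call_header *h,
                              int32_t in1, int32_t in2, int32_t *result)
{
    if (h->prog != ADD_PROG)
        return ST_NO_PROGRAM;      /* a different RPC protocol */
    if (h->vers != ADD_VERS)
        return ST_BAD_VERSION;     /* a version this server does not speak */

    switch (h->proc) {
    case ADD_PROC:
        *result = add_impl(in1, in2);
        return ST_OK;
    default:
        return ST_NO_PROCEDURE;    /* unknown procedure number */
    }
}
```

A server that supports both old and new clients would add a second branch on `vers` rather than rejecting every version but one.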

When proto.x is compiled, it produces (in C)

- `proto.h`: prototypes of the RPC procedures, including data structure definitions for parameters and return values
- `proto_clnt.c`: client stub code (one C function per API function) that sends RPC requests to the remote server
- `proto_svc.c`: server stub code that dispatches each RPC request to the specified procedure defined on the server
- `proto_xdr.c`: parameter and return value marshaling/unmarshaling routines, including byte order conversions

e.g.,

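```c
// server.c: implementation of ADD, invoked by the generated server stub (proto_svc.c)
#include "addtwo.h"
#include <rpc/svc.h>

int *add_1_svc(add_args *argp, struct svc_req *rqstp)
{
    static int result;  // static: a pointer to it is returned, so it must outlive this call

    (void)rqstp;        // request metadata is unused in this example
    result = argp->in1 + argp->in2;
    return &result;
}
```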

Note

- Procedure parameters and return values are passed by pointer
- Because a pointer to the result is returned, the result must be declared `static`: it is then allocated in the data segment rather than on the stack, so it survives across function invocations
- Arguments to server-side procedures are pointers to temporary storage; to keep arguments beyond the end of the procedure, the data (not merely the pointers) must be copied (e.g., into memory obtained from `malloc()`)
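The last two points can be illustrated with a small sketch. It redefines `add_args` so that it compiles on its own, and the names `last_request`, `remember_request`, and `add_and_remember` are hypothetical; a real server procedure would also take the `struct svc_req *` parameter.

```c
#include <stdlib.h>
#include <string.h>

/* Mirrors add_args from addtwo.x; redefined here so the sketch stands alone. */
struct add_args {
    int in1;
    int in2;
};

/* Hypothetical server state: the most recent request, kept beyond the call. */
struct add_args *last_request = NULL;

/* Copy the contents of *argp, not the pointer: the storage that argp
 * points to is only guaranteed to be valid during the call. */
int remember_request(const struct add_args *argp)
{
    struct add_args *copy = malloc(sizeof *copy);
    if (copy == NULL)
        return -1;
    memcpy(copy, argp, sizeof *copy);
    free(last_request);            /* free(NULL) is a no-op on the first call */
    last_request = copy;
    return 0;
}

/* Same shape as a server procedure: it returns a pointer to its result. */
int *add_and_remember(const struct add_args *argp)
{
    static int result;             /* in the data segment, so it outlives the call */

    if (remember_request(argp) != 0)
        return NULL;
    result = argp->in1 + argp->in2;
    return &result;
}
```

Storing `argp` itself in `last_request` would leave a dangling pointer as soon as the caller reused or released its temporary storage.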

Another example is Coursework 1: A Distributed Tickertape

NFS Case Study

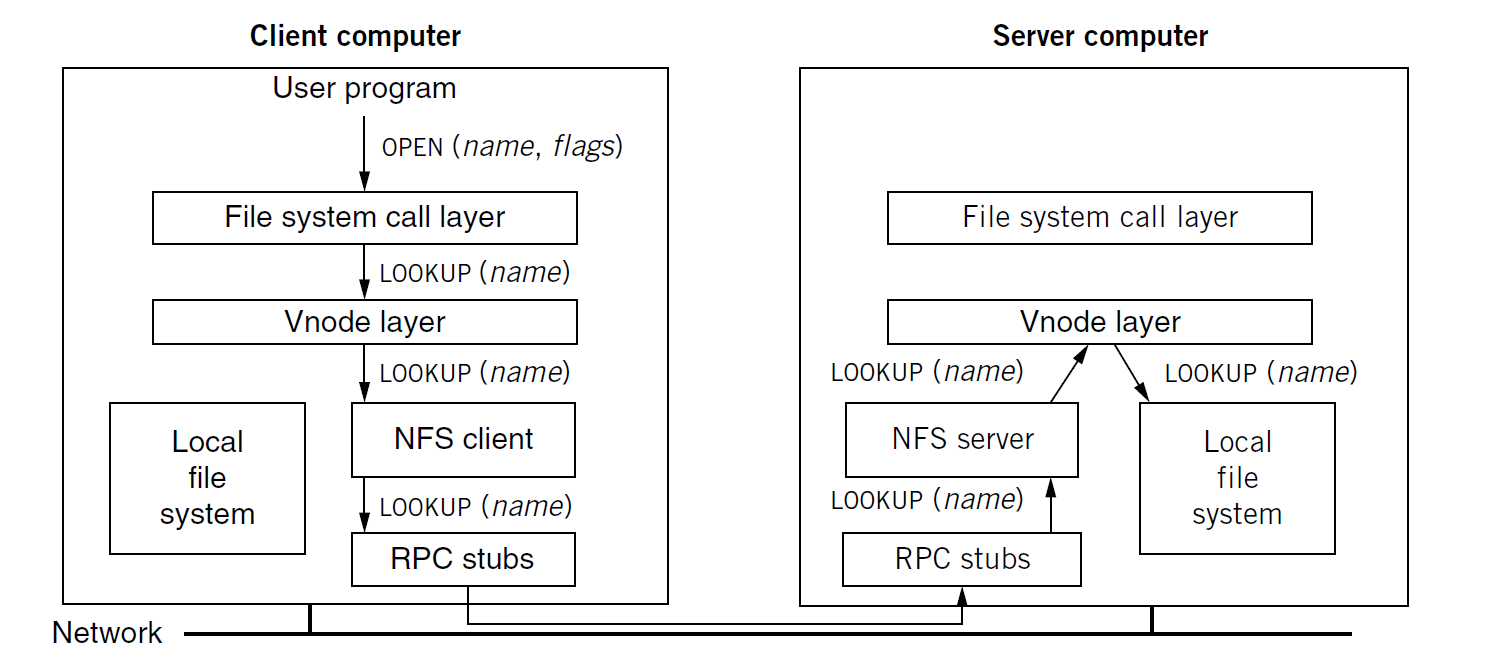

RPC Infrastructure in NFS

NFS splits the client at the vnode interface,

where the NFS client contains the stubs for system calls

Transparency in NFS

Client Syntax

NFS preserves the syntax of the client function call API

because parameters and return values of system calls are not changed in form or meaning

Server Filesystem Code

NFS requires some changes in the server filesystem code

- Add in-kernel threads (threads can block on I/O, which achieves good I/O concurrency)
- Change implementation
  - File handles over the wire, not file descriptors
  - Generation numbers added to on-disk i-nodes
  - User IDs carried as arguments, rather than implicit in process owner
  - Support for synchronous updates (e.g., for WRITE)

UNIX Filesystem Semantics

NFS does NOT preserve the UNIX filesystem semantics

Server Failure

In UNIX filesystem semantics, open() fails only for local reasons, typically because the file does not exist

In NFS, open() could also fail because the server has died, or it could be retried forever (the client should set a timeout)

close() Failure

Because the client issues WRITEs asynchronously when using close-to-open consistency,

close() waits for the server’s replies to those WRITEs (so that the data is safe on disk)

If the server is out of disk space, close() might fail

However,

close() never returns such an error in UNIX filesystem semantics (a full disk is reported by write()),

so applications on an NFS client must check both write() and close() for full disks
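A minimal sketch of what checking both calls looks like in application code, using only the standard POSIX calls `open()`, `write()`, and `close()`. The helper name `save_file` is hypothetical.

```c
#include <fcntl.h>
#include <stddef.h>
#include <sys/types.h>
#include <unistd.h>

/* Write len bytes to path. Returns 0 on success, or -1 if open(), write(),
 * or close() reports a failure. */
int save_file(const char *path, const char *data, size_t len)
{
    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (fd < 0)
        return -1;

    size_t done = 0;
    while (done < len) {
        ssize_t n = write(fd, data + done, len - done);
        if (n <= 0) {              /* error, or no progress: give up */
            close(fd);
            return -1;
        }
        done += (size_t)n;
    }

    /* On NFS, errors from deferred WRITEs (such as a full server disk)
     * can surface here, so the return value must not be ignored. */
    if (close(fd) < 0)
        return -1;
    return 0;
}
```

Code that ignores the result of `close()` can report success on an NFS mount even though some of the data never reached the server's disk.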

Errors Returned for Successful Operations

e.g.,

Client sends rename("a", "b") RPC to the server

Server completes the RENAME but fails before replying

Client sends rename("a", "b") RPC to the server again

Server does not find the file “a” and returns an error

However,

this never happens in UNIX filesystem semantics

but could happen in NFS because the server is stateless

If the server were stateful, it would first record the operation in its state and then perform it

If the server failed after recording it, it could still complete the operation on recovery by checking that state
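The rename scenario can be reproduced with a toy model. The sketch below assumes an in-memory `directory` struct standing in for the server's filesystem, and a client that always retransmits once, as if the first reply had been lost. All names are hypothetical.

```c
#include <string.h>

#define MAX_FILES 8
#define NAME_LEN  32

/* Hypothetical in-memory directory standing in for the server's filesystem. */
struct directory {
    char names[MAX_FILES][NAME_LEN];
    int  used[MAX_FILES];
};

/* Stateless RENAME handler: returns 0 on success, -1 if `from` does not exist.
 * It keeps no record of requests it has already served. */
int server_rename(struct directory *d, const char *from, const char *to)
{
    for (int i = 0; i < MAX_FILES; i++) {
        if (d->used[i] && strcmp(d->names[i], from) == 0) {
            strncpy(d->names[i], to, NAME_LEN - 1);
            d->names[i][NAME_LEN - 1] = '\0';
            return 0;
        }
    }
    return -1;
}

/* The first request is executed but its reply never arrives, so the client
 * retransmits. The application sees only the result of the retransmission. */
int client_rename_with_lost_reply(struct directory *d,
                                  const char *from, const char *to)
{
    (void)server_rename(d, from, to);   /* executed; reply lost */
    return server_rename(d, from, to);  /* retry: `from` is already gone */
}
```

The rename has taken effect on the server, yet the client is told that it failed.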

Deletion of Open Files

See Motivation of Generation Number: the NFS server will return a stale file handle error
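A minimal sketch of how a generation number lets the server detect a stale handle. It assumes a file handle carries an i-node number plus the generation number the i-node had when the handle was issued; the structs, the in-memory `inode_table`, and the function names are hypothetical simplifications.

```c
#include <stdint.h>

#define NUM_INODES 4

/* Hypothetical, simplified i-node: only the fields this sketch needs. */
struct inode {
    int      in_use;
    uint32_t generation;   /* incremented every time the i-node is reused */
};

/* Hypothetical file handle: an i-node number plus its generation at issue time. */
struct file_handle {
    uint32_t inode_no;
    uint32_t generation;
};

enum fh_status { FH_OK, FH_STALE };

static struct inode inode_table[NUM_INODES];

/* Allocate i-node `ino` for a new file and return a handle for it. */
struct file_handle create_file(uint32_t ino)
{
    inode_table[ino].in_use = 1;
    inode_table[ino].generation++;
    struct file_handle fh = { ino, inode_table[ino].generation };
    return fh;
}

void delete_file(uint32_t ino)
{
    inode_table[ino].in_use = 0;
}

/* A handle is valid only if its i-node is live and the generations match. */
enum fh_status check_handle(const struct file_handle *fh)
{
    if (fh->inode_no >= NUM_INODES)
        return FH_STALE;
    const struct inode *ip = &inode_table[fh->inode_no];
    if (!ip->in_use || ip->generation != fh->generation)
        return FH_STALE;
    return FH_OK;
}
```

Without the generation check, a client holding a handle to a deleted file could silently read or write whichever new file later reused the same i-node.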

Lesson: Trade-off between Semantics and Performance

Security

UNIX enforces read/write protections per-user

However,

NFS does NOT prevent unauthorized users from issuing RPCs to an NFS server

NFS does NOT prevent unauthorized users from forging NFS replies to an NFS client

Conclusion

For NFS

- People fix programs to handle new semantics
- People install firewalls for security
- NFS still gives many advantages of transparent client/server

For Multi-Module Distributed Systems (symmetric interaction with many data types)

- Build the system from different modules (each module in its own address space)
- Represent user connections with objects
- Pass object references among modules (using shared memory, e.g. Ivy)

Note

Without shared memory, modules must send object contents instead

- The receiving module only knows the contents of the connection as it was passed
- The connection might have changed since then (so an object reference is necessary)

NFS uses file handles (not object references) to solve this problem, but that does not help in other situations

Failures in RPC

RPC can return failure instead of results

New Failure Modes

- Remote server failure
- Communication (network) failure

Possible Failure Outcomes

- Procedure not executed
- Procedure executed once
- Procedure executed multiple times
- Procedure partially executed

Solution: At Most Once Semantics

The RPC procedure executes zero times or once, but never more than once

- Risk: request message lost
  - The client retransmits requests that were not executed (no problem)
- Risk: reply message lost
  - The client retransmits requests that were already executed (which could cause multiple executions)
  - The server can keep a “replay cache” to reply to repeated requests without re-executing them
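A replay cache can be sketched as a small table keyed by a request ID. The sketch assumes that the client tags each request with an ID (called `xid` here) and reuses the same ID when it retransmits. The `server` struct, the `increment` procedure, and the fixed-size cache are hypothetical.

```c
#include <stdint.h>

#define CACHE_SLOTS 16

/* Hypothetical cache entry: the reply already sent for a given request ID. */
struct cache_entry {
    int      valid;
    uint32_t xid;       /* request ID chosen by the client */
    int32_t  reply;
};

struct server {
    int32_t counter;                        /* state changed by the procedure */
    int     executions;                     /* how often the procedure really ran */
    struct cache_entry cache[CACHE_SLOTS];
};

/* A procedure that is not safe to repeat: every execution changes the result. */
static int32_t increment(struct server *s)
{
    s->executions++;
    return ++s->counter;
}

/* Handle one request. A retransmission with a known xid gets the cached reply. */
int32_t handle_request(struct server *s, uint32_t xid)
{
    struct cache_entry *e = &s->cache[xid % CACHE_SLOTS];
    if (e->valid && e->xid == xid)
        return e->reply;                    /* duplicate: do not execute again */

    int32_t reply = increment(s);
    e->valid = 1;
    e->xid = xid;
    e->reply = reply;
    return reply;
}
```

This sketch keeps the cache in memory and overwrites a slot when two IDs collide, so it gives at-most-once behaviour only while the server stays up and the entry has not been evicted. Surviving a crash would require the cache to be stored persistently.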